Five meta-strategies for building with LLMs

LLMs are easy to prototype with, and have dramatically lowered the entry barrier to AI. Many tasks that were fairly complex not too long ago are now really approachable.

Consider that in the pre-LLM world, analyzing a user's sentiment typically required (i) a data scientist or ML engineer, (ii) quite some effort to gather sample user messages and get them labeled, and (iii) yet more effort to train a model, optimize it, and deploy it. Now, we can write code like this within a minute and get really good results for cheap:

```python
from openai import OpenAI


def is_user_upset(user_message: str) -> bool:
    """Return True if the model judges the user to be upset."""
    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    prompt = (
        "You will be given a user's message to a company's technical support "
        "department. Respond with 1 if the user is likely to be upset, and 0 "
        "otherwise. Do not respond with any other values."
    )
    completion = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[
            {"role": "system", "content": prompt},
            {"role": "user", "content": user_message},
        ],
    )
    # The reply text is in message.content; compare that, not the message object.
    return completion.choices[0].message.content.strip() == "1"
```

Unfortunately, as anyone who has tried to deploy LLM-powered software knows, "easy to prototype" doesn't mean "easy to bring to production". In fact, there seems to be some kind of absurd inverse relationship between the two – sometimes the easier it is to prototype something, the harder it is to productionize it!

Most business leaders and PMs, however, haven't quite received that memo. Appetite and funding for AI – both amongst VCs and within most large enterprises – are sky high, and the terms "AI" and "LLMs" are often conflated. If you are an engineer, you will most likely receive some request to build an LLM-based service or solution within the next twelve months.

And when you get that request, please consider these five "meta strategies" for building with LLMs.

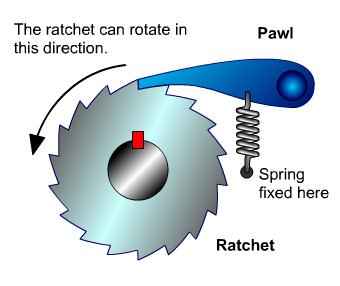

Strategy 1: Follow a 'ratchet model' of software development

My colleague Ariya is a strong proponent of the ratchet model. It is a really effective way of thinking about any big and complex engineering problem, and breaking it down into more achievable and manageable chunks.

So how does it work?

Imagine that one fine day, your PM comes to you with this huge product requirement: "A chatbot that can converse in full-duplex speech, answering questions in real-time from 20 different data sources."

You could start building exactly that, and deliver something in a couple of months. You could task someone to handle the streaming infrastructure, someone else to handle text-to-speech (TTS) and speech-to-text (STT) and everything voice-related, another person to perform ETL on the 20 data sources, and one last person to build the RAG system on top of the 20 data sources.

And yes, it might just go according to plan.

However, there will most likely be some hiccups along the way. And after three months, what if something fails spectacularly – what if the conversation handling is spotty? What if the system can't reliably answer questions for ten of the data sources? What if the response time is way too slow for effective real-time conversation?

In this case, building a rocket that "shoots for the moon" and failing will result in a precipitous and fatal fall back to the earth.

The ratchet model is a better approach in this case. Instead of shooting for the moon directly, we could break this project up into several ratchets:

- Ratchet 1: Build a simple app that can respond "hello world".

- Ratchet 2: Load in data from one data source, and have the app answer questions about that single data source.

- Ratchet 3: Support two data sources separately. Remember those cassette tapes of yore where you could only play track A or track B, by pressing a button?

- Ratchet 4: Support two data sources together. (Any AI engineer will say that this is non-trivial to do well. How do you decide which data source(s) to use?)

- Ratchet 5: Stand up streaming infrastructure, and add in streaming.

- Ratchet 6: Add voice in and voice out.

- Ratchet 7: Support full-duplex conversation mode.

- Ratchets 8, 9 and 10: Scale it up to 20 data sources.

Each ratchet is a solid landing place – a "fall back" – should there be a problem with a subsequent ratchet. If ratchet 7 fails, for example, we will have ratchet 6 to rely on. So we'll still have an app that has voice-in and voice-out for two data sources – probably sufficient for a demo! (And certainly better than "nothing to demo"!)

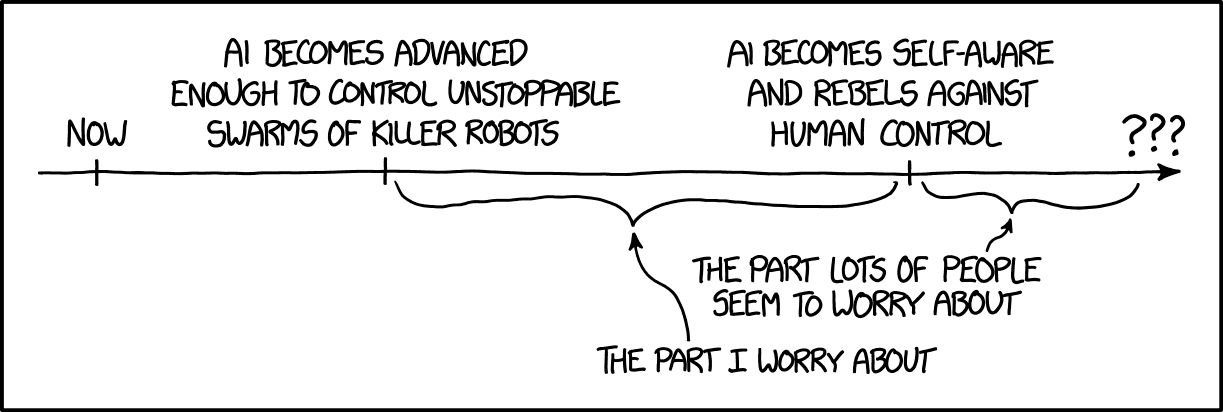

Here is the ratchet model applied another way: no need to worry about AI rebelling against human control, as long as it can't control unstoppable swarms of killer robots!

Strategy 2: Understand your LLMs' hallucinations

LLMs fundamentally do nothing but hallucinate. Every word you see in an LLM's output is a function of patterns between tokens that were established and "ingrained" in the model during training or fine-tuning, or that come from your prompt.

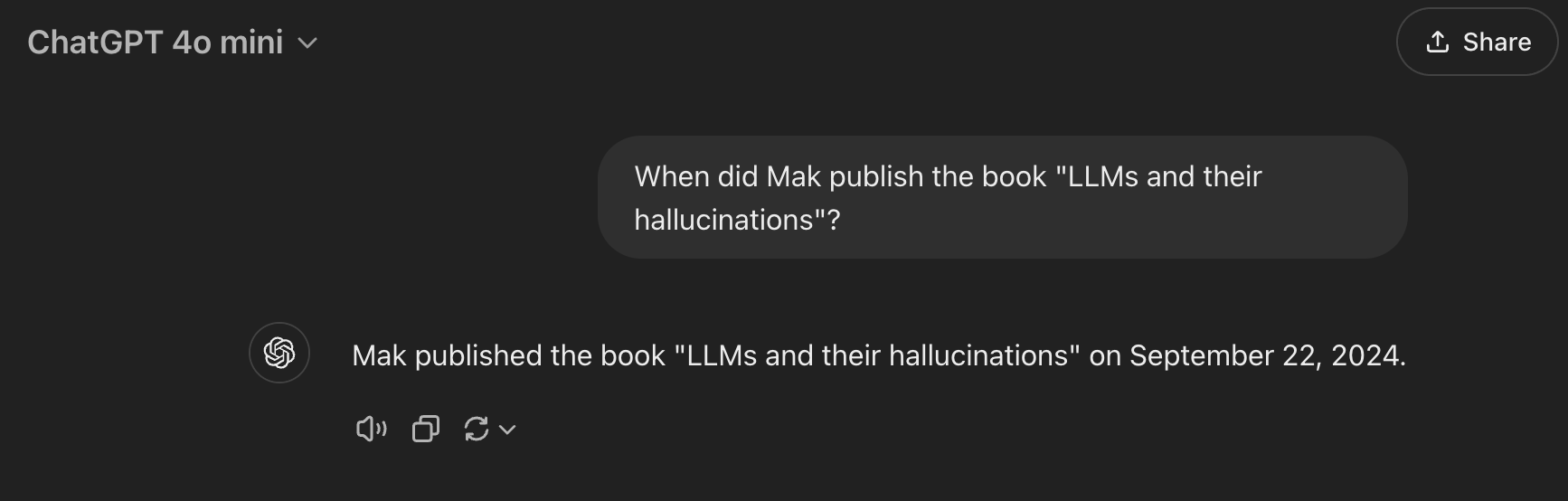

Here is a trivial example of an undesired hallucination:

Note: I have not published, and currently have no plans to publish, such a book.

Although newer models are getting better at controlling undesired hallucinations, these remain a problem, especially when dealing with facts or when your prompt context is becoming saturated.

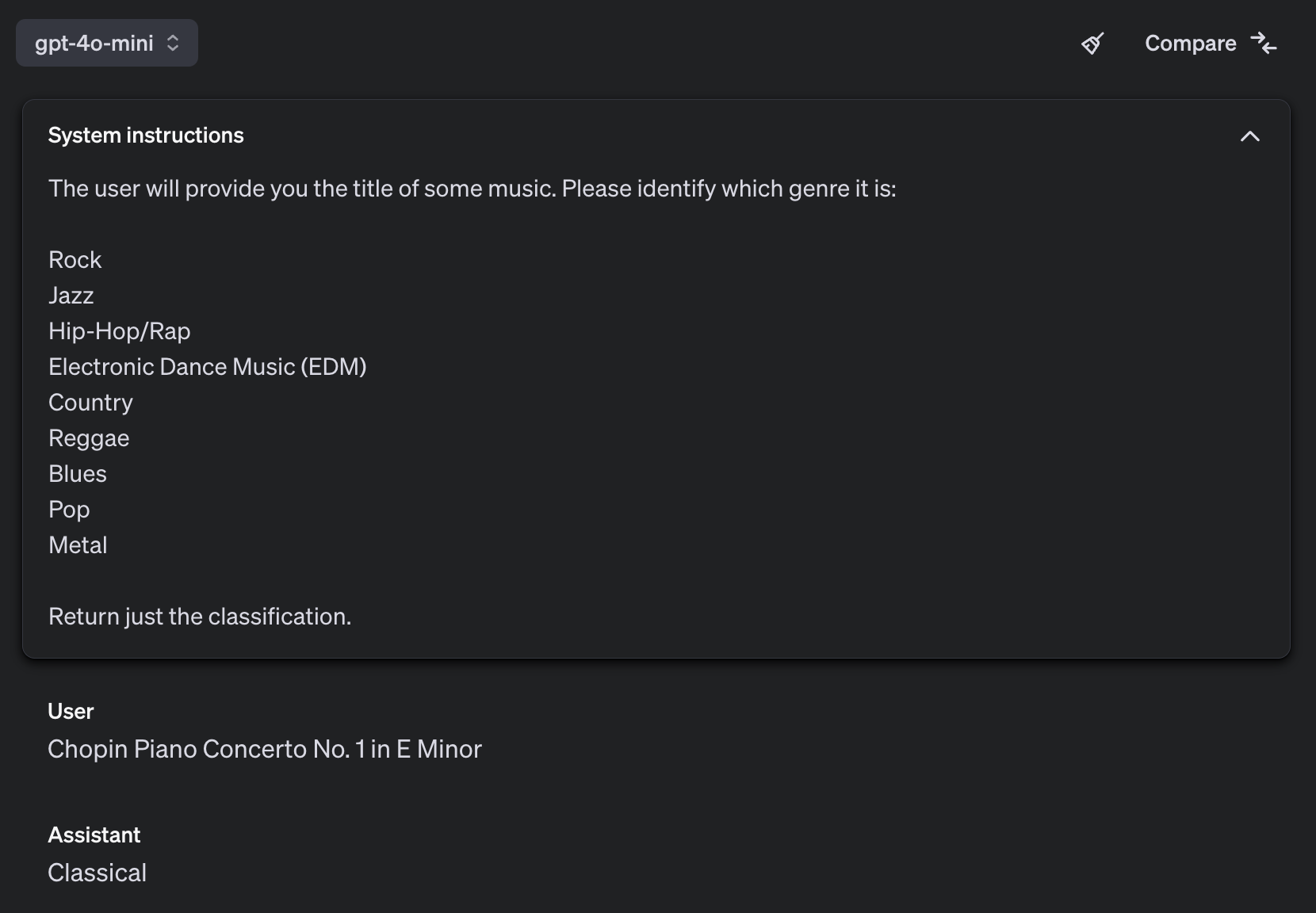

It is one thing for a model to hallucinate "facts", but the problem really begins when you are using function calling or otherwise expect your model to respond in a structured manner. Since we started this blog post with a simple binary classification example, let's notch it up to a multi-class problem. Imagine that you are trying to build a service that identifies users' musical taste, and as part of your service you need to classify some songs in each user's Spotify history. This is something very real that might happen:

Note that Classical is not in our prompt, yet gpt-4o-mini still returned it anyway. gpt-4o does a little better, returning "N/A" or "None" depending on your temperature settings. Either way, if you have downstream code that depends on the LLM output being one of the nine genres, you will run into some errors.

There are several possible ways of dealing with this problem.

- Write additional code to ensure that the LLM output is within one of your expected categories. If it isn't, retry N times.

- Strengthen (and lengthen!) your prompt – try to guide and focus the model to return only the desired categories and nothing else. Add in chain of thought prompting, inner monologues, examples, and so on.

- Cram more possible categories in there.

- Enforce structured output, if your model supports it. See docs or a blog post for the Gemini family (where you can even provide enums!), or docs for OpenAI's gpt-4o family or later.
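The first option above can be sketched roughly as follows. This is a minimal sketch, not the implementation used in this post: `ALLOWED_GENRES` is a hypothetical label set (the nine genres are not listed here), and `ask_model` is a placeholder for whatever function wraps your LLM call.

```python
from typing import Callable, Optional

# Hypothetical label set; the actual nine genres are an assumption.
ALLOWED_GENRES = {
    "rock", "pop", "jazz", "hip-hop", "country",
    "electronic", "r&b", "metal", "folk",
}


def classify_with_retry(
    song: str,
    ask_model: Callable[[str], str],  # placeholder for your LLM call
    max_attempts: int = 3,
) -> Optional[str]:
    """Return a genre from ALLOWED_GENRES, or None if every attempt is off-list."""
    for _ in range(max_attempts):
        # Normalize whitespace and case before checking membership.
        answer = ask_model(song).strip().lower()
        if answer in ALLOWED_GENRES:
            return answer
    # All attempts were off-list; the caller chooses the fallback.
    return None
```

Returning `None` instead of raising leaves the fallback decision (an "unknown" bucket, a default genre, a human review queue) to the calling code.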

None of these options is perfect. More code equals more complexity, and retrying may not even help: your LLM output is unlikely to change if your prompt is fixed and your temperature is low. Retrying also introduces latency. Longer prompts run the risk of context saturation and poorer instruction following. Cramming more options in your prompt increases the odds that you'll get a match, but probably worsens precision in many cases. And if you use an older model family without structured output support (that may also be better at reasoning... like gpt-4-turbo...) you are out of luck.

While this example is trivial, there are some very real repercussions. When building the personal AI agent that is Ario, my team often went back and forth with our product team about what features we should support.

Here is a representative conversation:

Product: Hey! Can we have our agent support creating time-based reminders, location-based reminders, and calendar events? Oh also event-based reminders. (But not event-based calendar events.) Also allow users to modify time-based reminders and calendar events but not location-based reminders, and to delete all reminders but not calendar events.

AI Engineers: Our models will be very confused... can we please just support deletion of everything, and modification of everything???

Product: Nope... deleting calendar events is too risky!

Well, at least this is still a fairly straightforward classification problem. How about an even more painful scenario:

Product: Let's build an AI agent that users can talk to about their personal data, but not general queries (we don't want to be a free ChatGPT).

AI Engineers: How do you distinguish between a general query and a query about personal data?

Product: ...

In the end, we decided to have our classifier support all possible options - creation, modification, and deletion for all types of reminders and calendar events. As we predicted, if we had listed all of the original permutations, the model would occasionally have trouble sticking to them. That was unacceptable for a production system.

We also decided to support both "general chat" and "personal data chat". In fact we could not accurately distinguish between them – natural language is just too nuanced – and we built our agent in a way that does not depend on such an impossible classification.

If you are a PM, know that it is helpful to engage your AI engineering team as early as possible in the product development process – well before you start writing PRDs. A good AI team should be able to understand their models' hallucinations, and help you cut away options that are simply too difficult to achieve reliably with LLMs. A good AI system is one that does not "resist" the LLMs too much.

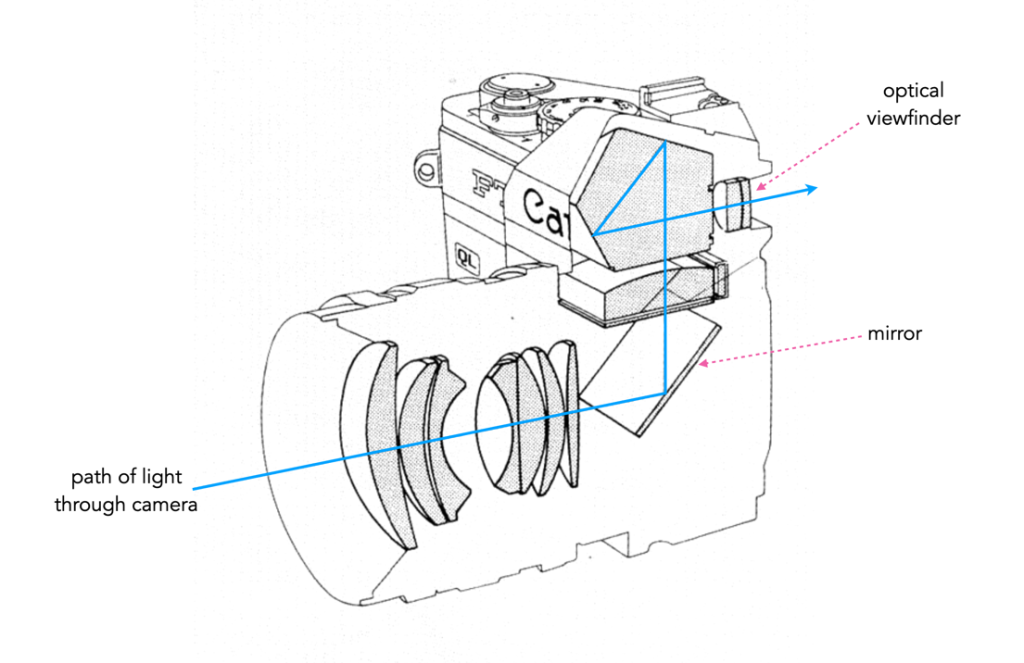

Here is an analogy - especially for the photography enthusiasts out there.

Imagine a ray of light coming into your camera. You could "steer" it by letting it pass through different kinds of glass and reflecting off prisms and other mirrors. However, each time the light's path changes course, the ray becomes weaker and by the time it hits your camera's sensor, you'll have lost several stops of light.

Strategy 3: If you can't win the game, change the rules. (Or change the game!)

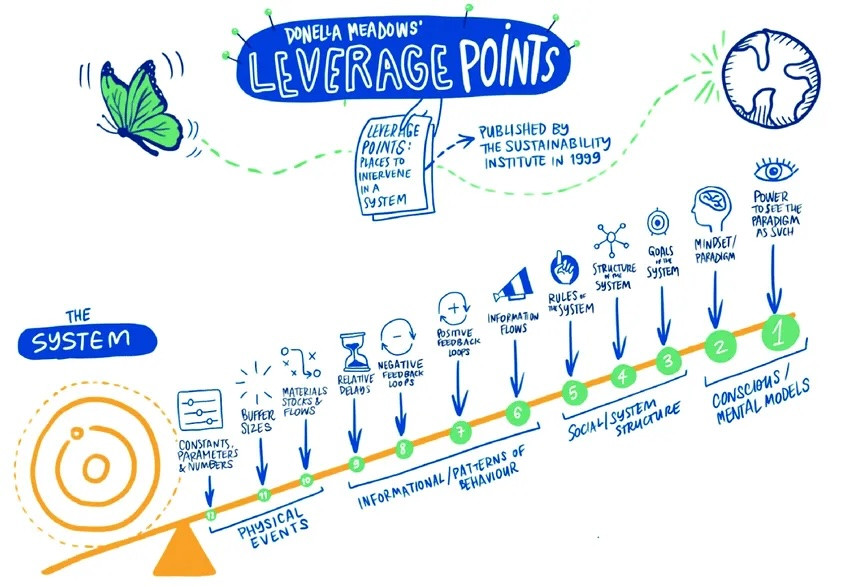

I have always been fascinated by the fields of systems thinking and system dynamics. Over the years, I have read a lot of writing by luminaries in those fields such as Jay Forrester and Donella Meadows.

Specifically, Meadows in her book "Thinking in Systems" argues that there are twelve places to intervene in a system - in any system. She calls these "leverage points".

These are great business principles, and we can also broadly apply them to engineering and AI. Here are some examples:

- Applied to testing: If you can't pass the test, change the test!

- Applied to information retrieval: If you're building a RAG system and are having problems retrieving some data reliably, change the data! For example - by further enriching or transforming the data, changing the data format, revisiting your chunking strategy, attaching more metadata to each chunk, etc.

- Applied to intent classification: If you're not able to classify reliably between classes D and E in a multi-class classification system, perhaps just combine them into one class. At Ario we had issues trying to classify users' queries as "chitchat" and "calendar chat" (i.e. chat about their calendar data). (This is really splitting hairs, right?) We ended up combining them into one class, and revisiting how we handled both kinds of queries downstream.

- Applied to an Agent's capabilities: If it is a hard task to solve from a natural language processing lens, can it be solved with some simple UX, or with some hard-coded processing? If it is difficult to get the agent to refuse to do X, can we just let the agent do X instead? And if all else fails... can we change product requirements or user expectations?
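To make the intent classification point concrete, here is a small sketch of merging two hard-to-separate classes into one. The label names and the merged class name `"chat"` are hypothetical, and the sample predictions in the usage note are made up for illustration.

```python
# Hypothetical label names; the merged class name "chat" is an assumption.
MERGED_LABELS = {
    "chitchat": "chat",
    "calendar_chat": "chat",
}


def merge_label(raw_label: str) -> str:
    """Map a fine-grained label to its merged class; other labels pass through."""
    return MERGED_LABELS.get(raw_label, raw_label)


def accuracy(predicted: list[str], expected: list[str]) -> float:
    """Fraction of positions where the predicted label equals the expected one."""
    pairs = list(zip(predicted, expected))
    return sum(p == e for p, e in pairs) / len(pairs)


def merged_accuracy(predicted: list[str], expected: list[str]) -> float:
    """Accuracy after collapsing the confusable classes on both sides."""
    return accuracy(
        [merge_label(p) for p in predicted],
        [merge_label(e) for e in expected],
    )
```

If a classifier swaps `"chitchat"` and `"calendar_chat"` on two out of three queries, `accuracy` penalizes both mistakes while `merged_accuracy` does not. The distinction then has to be handled downstream, as described above.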

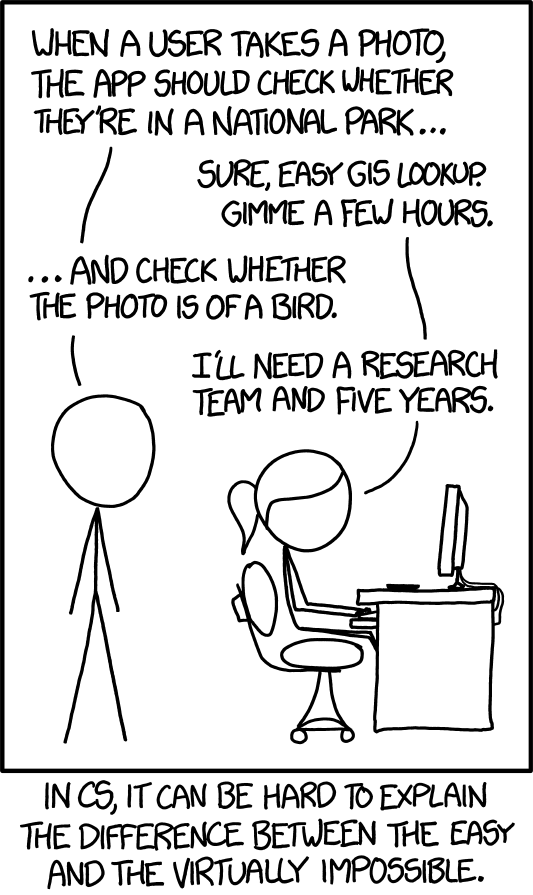

Many of you will recognize the scenario above – when the product team gives a two-part requirement, where one part is exponentially more difficult than the other. Many computer science problems appear similarly complex (or similarly easy!) at first glance or to the untrained observer, but differ enormously in actual difficulty.

So when your PM asks you to build something really complex, offer to build something else that appears similarly complex but is orders of magnitude easier. Change the rules of the game!

Strategy 4: LLMs are just one of many tools in the AI engineer’s toolbox. Don't forget the others!

AI != LLMs. Even if you are developing a generative AI product, it does not mean that the entire product has to be LLM-driven. More "traditional" ML and data science approaches are still relevant.

One example concerns testing and evaluation. Many people would immediately jump to use LLMs to evaluate LLM output – why not automate everything? While LLMs are great for evals, they may not always be the best solution. Let's say you are building a chatbot, and wanted to ensure that the chatbot is able to answer a bunch of general knowledge questions. The truth is that you don't need an LLM to evaluate your chatbot's answers to questions like: "What is the largest planet in the solar system?", "How many Rs are there in strawberry?" and "Who is the CEO of Tesla?". Since all good answers to these questions will contain "Jupiter", "3" (or "three") and "Elon Musk", you may as well do a simple substring match.
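A substring-based eval along these lines might look like the sketch below. The function names and the `chatbot` callable (question in, answer text out) are assumptions for illustration; the questions and accepted answers are the ones from the paragraph above.

```python
from typing import Callable


def contains_any(answer: str, accepted: list[str]) -> bool:
    """True if any accepted term appears in the answer, ignoring case."""
    text = answer.casefold()
    return any(term.casefold() in text for term in accepted)


# (question, accepted substrings) pairs taken from the examples above.
EVAL_CASES = [
    ("What is the largest planet in the solar system?", ["Jupiter"]),
    ("How many Rs are there in strawberry?", ["3", "three"]),
    ("Who is the CEO of Tesla?", ["Elon Musk"]),
]


def run_evals(chatbot: Callable[[str], str]) -> float:
    """Return the fraction of eval questions whose answer contains an accepted term."""
    passed = sum(contains_any(chatbot(q), accepted) for q, accepted in EVAL_CASES)
    return passed / len(EVAL_CASES)
```

Plain substring matching has blind spots: "3" also matches "13", for instance. For short factual answers it is usually good enough, and it is fast, free, and deterministic.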

Another example where an LLM-only approach is insufficient is when you are performing RAG over some kinds of less-textual data sources. While LLMs are great at generating text, they will struggle with data that is numerically intensive, domain specific, or otherwise less aligned with their training data. In such cases, it may be helpful to apply some traditional data cleaning and wrangling methods to enhance the raw data, before feeding it to the LLM.

Let's say you are working with some health data – e.g. a log of heart rate measurements from a smart watch – and you expect to answer natural language queries from the user such as heart rate averages, variability, etc. Instead of letting the LLM do the math (which is a really bad idea), why not pre-compute all of these metrics, and expose them to the LLM? You could even compute the metrics for different time periods and all the LLM will have to do is to (literally) recite one of these metrics if the user asks.
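As a rough sketch of that pre-computation, using only the standard library: the function names, the metric names, and the prompt format are assumptions. The standard deviation here is a simple measure of spread in the readings, not a clinical heart rate variability metric.

```python
from statistics import fmean, pstdev


def heart_rate_summary(bpm_readings: list[int]) -> dict[str, float]:
    """Pre-compute simple statistics so the LLM never has to do arithmetic."""
    return {
        "average_bpm": round(fmean(bpm_readings), 1),
        "min_bpm": min(bpm_readings),
        "max_bpm": max(bpm_readings),
        # Population standard deviation: a simple spread measure, not clinical HRV.
        "std_dev_bpm": round(pstdev(bpm_readings), 1),
    }


def to_prompt_context(period: str, summary: dict[str, float]) -> str:
    """Render one period's metrics as a line of text to place in the prompt."""
    facts = ", ".join(f"{name}={value}" for name, value in summary.items())
    return f"Heart rate summary for {period}: {facts}"
```

Calling `to_prompt_context` once per period (last 24 hours, last 7 days, last 30 days) gives the LLM a handful of ready-made facts to recite.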

Let's not forget feature engineering. Again, in the case of RAG, if you are working with many large documents you will probably need to chunk them at some point. Rather than selecting relevant chunks based on semantic similarity alone (which often does not capture true contextual relevance), consider extracting pertinent features and tagging them as part of each chunk's metadata. You can then rank the metadata as appropriate, and use that in combination with semantic similarity to fetch the most relevant chunks for your retrieval application.
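One way to combine the two signals is a weighted score, sketched below. Everything here is an assumption for illustration: the `Chunk` structure, the tag-overlap measure, and the 50/50 default weighting. The semantic similarity is assumed to be pre-computed by your vector store and scaled to [0, 1].

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    similarity: float  # semantic similarity to the query, assumed pre-computed in [0, 1]
    tags: set[str] = field(default_factory=set)  # features extracted at indexing time


def rank_chunks(
    chunks: list[Chunk],
    query_tags: set[str],
    tag_weight: float = 0.5,
    top_k: int = 3,
) -> list[Chunk]:
    """Rank chunks by a weighted blend of semantic similarity and tag overlap."""

    def score(chunk: Chunk) -> float:
        # Fraction of the query's tags that this chunk carries.
        overlap = len(chunk.tags & query_tags) / len(query_tags) if query_tags else 0.0
        return (1 - tag_weight) * chunk.similarity + tag_weight * overlap

    return sorted(chunks, key=score, reverse=True)[:top_k]
```

With `tag_weight=0.0` this reduces to plain similarity ranking, which makes it easy to compare the two approaches on the same set of queries.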

The broader point is that AI engineering includes not only LLMs, but also requires other kinds of AI, ML and data science techniques.

This strategy also lends itself to hiring. A good AI engineer should be familiar not only with LLMs, but also have a solid grounding in "traditional" ML. So structure your interviews accordingly!

Strategy 5: Build not for today, but for tomorrow.

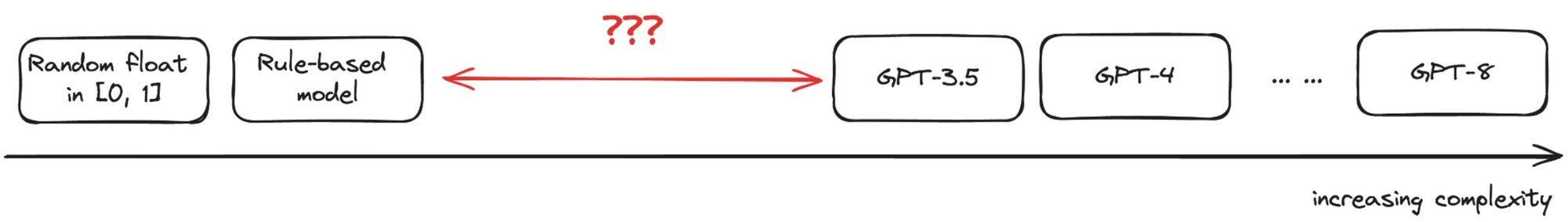

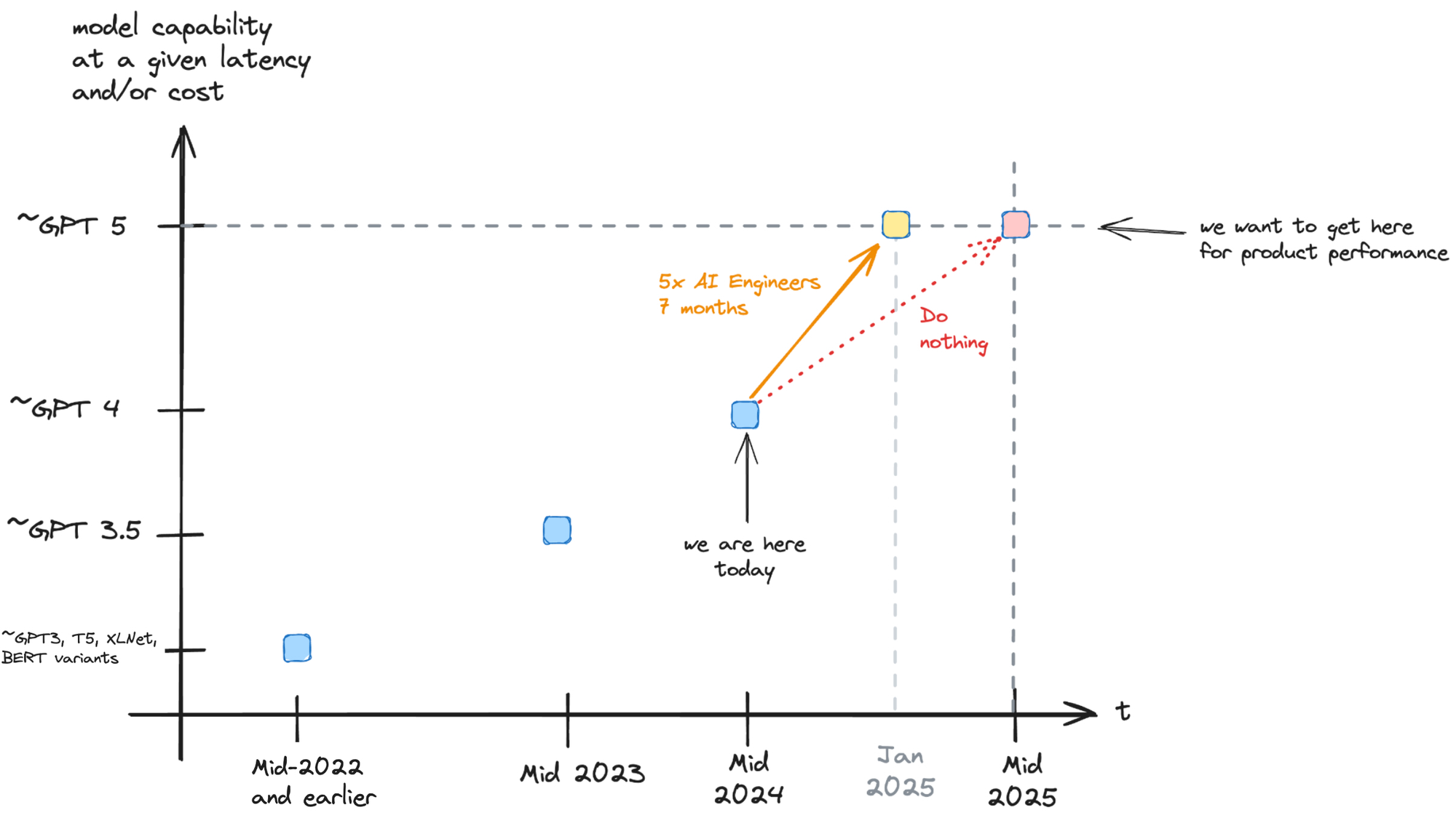

AI is advancing quickly. What is difficult today may well be straightforward tomorrow. To look ahead, simply consider how far we have come in the past few years. Before mid-2022, the state-of-the-art language models were GPT-3, T5, XLNet, and various BERT variants. By early/mid 2023, the GPT-3.5 API was readily accessible – that brought not only language processing and understanding but also some surprisingly capable reasoning. (And hundreds of other really capable models at roughly GPT-3.5 level emerged, in varying shades of "openness".) And of course by late 2023/early 2024, GPT-4 and GPT-4 Turbo were the norm, with unmatched reasoning and instruction-following capabilities – until o1 came out.

Prices are also dropping rapidly. Consider GPT-3.5. In June 2023, GPT-3.5-16k cost $3.00/1M input tokens. This dropped to $1.50 in November 2023, and then further to $1.00 in January 2024. Now, six months later, GPT-4o-mini is available for $0.15/1M input tokens – and this is a much more powerful model than GPT-3.5 with an 8x context window! We also have Gemini 1.5 Flash-8B, which has similar performance to 4o-mini and costs even less: $0.0375/1M input tokens. (Google is also dangling free fine-tuning.)
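To put those numbers in perspective, here is a quick back-of-the-envelope calculation using the prices quoted above. The helper names and the idea of plugging in a monthly token volume are assumptions for illustration.

```python
# Input-token prices in USD per 1M tokens, as quoted above.
PRICES_PER_1M_INPUT_TOKENS = {
    "GPT-3.5-16k (June 2023)": 3.00,
    "GPT-4o-mini": 0.15,
    "Gemini 1.5 Flash-8B": 0.0375,
}


def input_cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost in USD of sending `tokens` input tokens at the given price."""
    return tokens / 1_000_000 * price_per_million


def price_drop_factor(old_price: float, new_price: float) -> float:
    """How many times cheaper the new price is compared to the old one."""
    return old_price / new_price
```

By this arithmetic, GPT-4o-mini input tokens are about 20 times cheaper than GPT-3.5-16k was in June 2023, and Gemini 1.5 Flash-8B about 80 times cheaper.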

Progress is great, but rapid progress does cause some challenges if you are a developer. It is now mid-2024, and let's say that we want to build a really advanced AI agent that involves "next-generation" (GPT-5?) capability. Would it be better to devote a whole team of AI engineers to build it over six or seven months, or would it be better to work on something else and let GPT-5 come out in (say) a year? Or let's say we want to reduce the cost of our product – should we devote several engineers and several weeks of time to that effort, or simply wait for competition to force prices to drop?

This is ultimately a judgement call. There isn't a set rule for deciding what to invest in vs. what to let the rest of the industry build. Invest in things that will provide lasting advantage.
